Why the EU Cyber Resilience Act Will Catch US Software Companies Off Guard

Most US software regulations are built around intent. The EU Cyber Resilience Act (CRA) is built around outcomes. That difference is why 2026 will feel like a shock for many US companies selling softw

February 19, 2026

The EU Cyber Resilience Act (CRA) requires outcomes, not intent. It doesn’t ask whether you tried to be secure. It asks whether you can prove you are secure. There are no vibes. No hand-waving. No “reasonable effort” clauses.

CRA Is Not “Another Framework”

The European Union is working to make the software-driven world more secure. The CRA requires manufacturers to take an active role in securing their software supply chain. This includes documentation tasks like producing SBOMs, using secure-by-design software development practices, and being accountable for risks from third-party and open source software. Perhaps most importantly, it requires manufacturers to actively manage vulnerabilities not just at the time of software creation, but for the life of the product (and then some). Manufacturers not only have to let customers know about vulnerabilities, they also have to share fixes they develop internally with the upstream open source projects.

The Real Cost of Organizational Sprawl

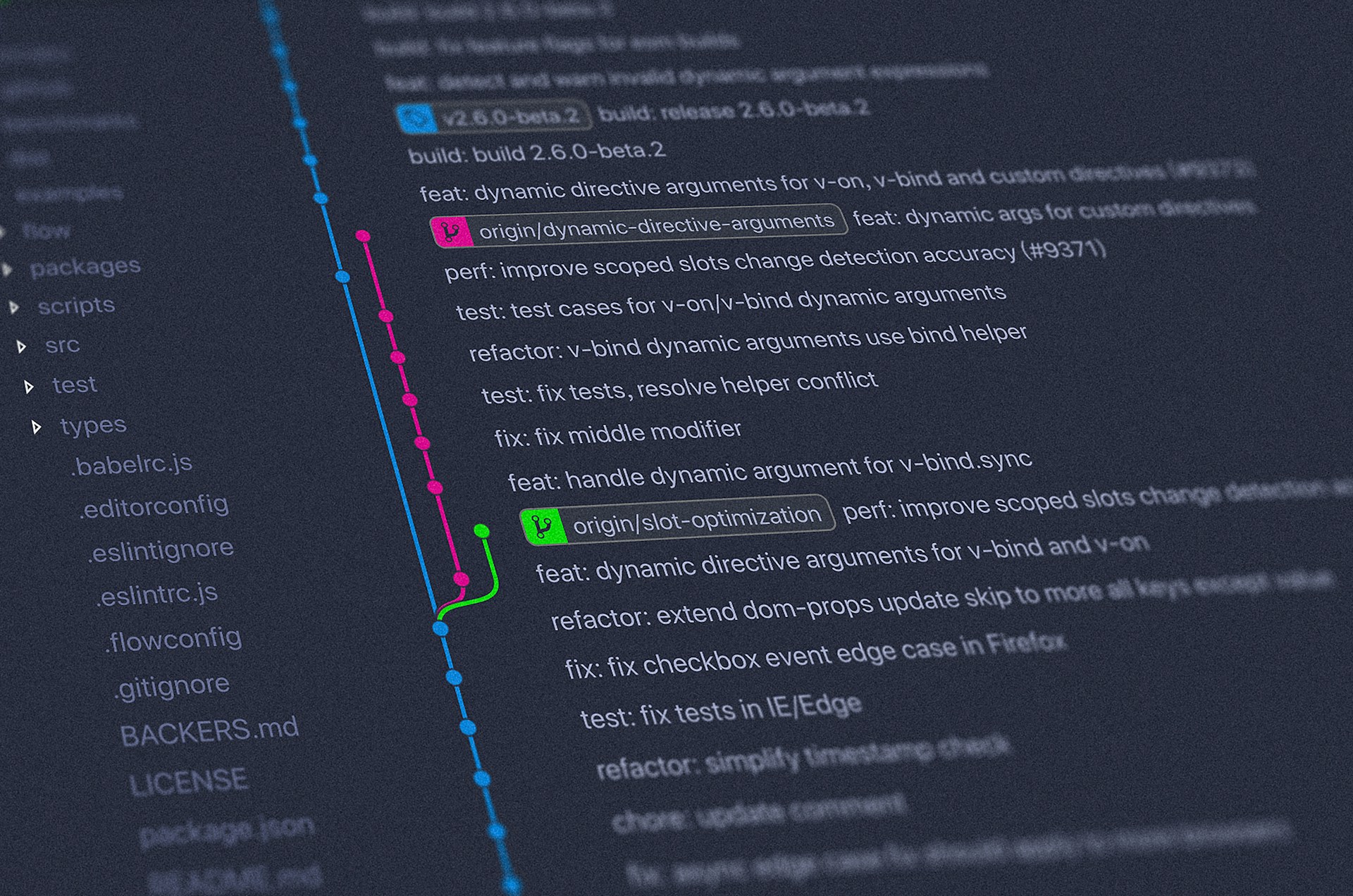

From our work helping organizations prepare for CRA alignment, the biggest surprise isn’t the requirements—it’s how unprepared internal systems are to meet them. CRA compliance exposes a problem many companies have ignored for years: every team builds software differently.

Teams choose different dependencies, build in different pipelines with different tools, and use different definitions of “secure.” That diversity empowers developers, but when you try to audit it, you realize how much of an organizational liability it is.

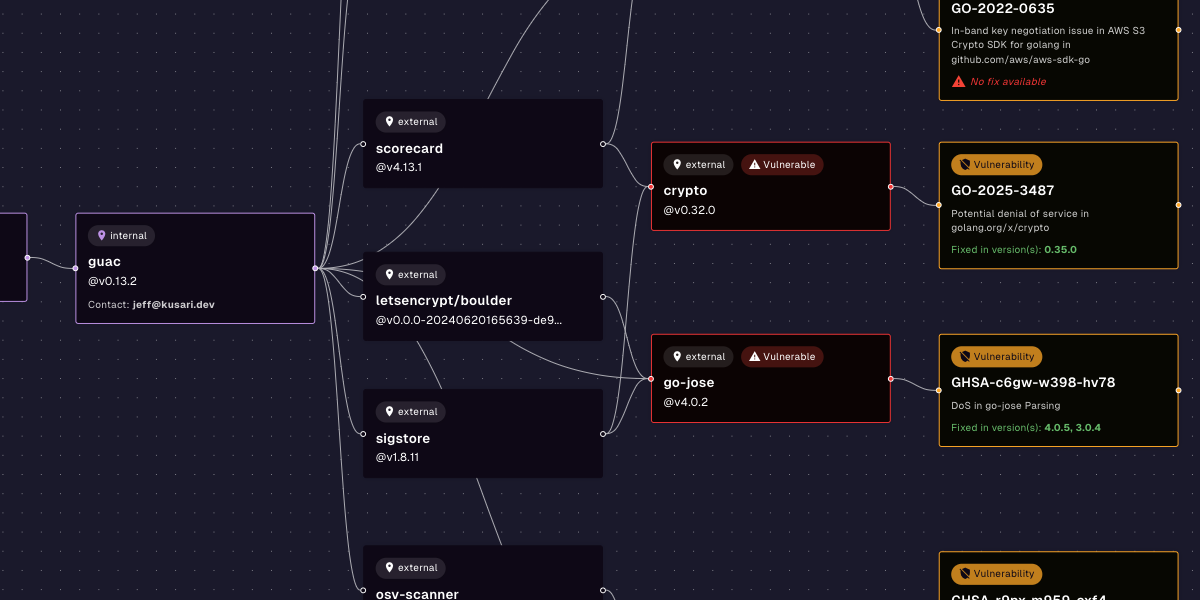

We consistently see organizations that can’t answer basic questions about what they ship. They don’t know if they’re exposed to a particular vulnerability. Once they figure out that they are, they’re not sure where the vulnerable versions are running. Every incident becomes an ordeal.

The CRA doesn’t create these problems. It simply makes them impossible to ignore.
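To make those questions concrete, here is a minimal Python sketch of the lookup an organization needs to be able to run: given a record of what each product ships, find every deployment carrying an affected version. The inventory layout, the product and package names, and the `find_exposed` helper are all hypothetical and exist only for illustration. A real inventory would be generated from SBOMs and deployment records rather than written by hand.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ShippedComponent:
    product: str      # product or service that ships the component
    environment: str  # where that product build is running
    package: str      # dependency name
    version: str      # exact resolved version


# Hypothetical inventory; in practice this is generated, not hand-written.
INVENTORY = [
    ShippedComponent("billing-api", "prod-eu", "examplelib", "2.3.1"),
    ShippedComponent("billing-api", "staging", "examplelib", "2.4.0"),
    ShippedComponent("web-portal", "prod-eu", "examplelib", "2.3.1"),
    ShippedComponent("web-portal", "prod-eu", "otherlib", "1.0.0"),
]


def find_exposed(inventory, package, affected_versions):
    """Return every shipped component matching an affected package version."""
    affected = set(affected_versions)
    return [
        item for item in inventory
        if item.package == package and item.version in affected
    ]


exposed = find_exposed(INVENTORY, "examplelib", {"2.3.0", "2.3.1"})
for item in exposed:
    print(f"{item.product} in {item.environment} ships {item.package} {item.version}")
```

The code itself is trivial. The hard part is having a single, trustworthy inventory to run it against, which is exactly what teams with divergent pipelines tend to lack.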

Our recent AppSec in Practice research makes this gap measurable. Only 28% of teams report strong visibility into transitive dependencies—where the majority of inherited risk actually hides. Most organizations aren’t struggling because they don’t care about security. They’re struggling because they don’t have systemic insight into what they’re shipping.

That lack of visibility isn’t just theoretical risk. Nearly 47% of respondents spend five or more hours per week responding to software supply chain security incidents. That’s not proactive risk management—that’s constant reactive triage. Under the CRA, that operational drag becomes regulatory exposure.

Get the full AppSec in Practice research report for free.

Standards Become Survival Tools

This is where frameworks like the Open Source Project Security Baseline matter. The Baseline provides a pragmatic roadmap for standardizing how software is built and secured—without dictating specific tools or architectures. Standardization reduces cognitive load for developers, enables automation, reduces the audit burden, and speeds up response when issues arise.

Like the OSPS Baseline, good standards specify outcomes, not methods. Given clear expectations from consistent, well-documented standards, developers can produce acceptably secure software the first time. This reduces frustration and time-to-value, allowing development teams to ship faster. Security becomes an enabler, not a blocker, which is exactly what modern software teams need.

What Smart Companies Are Doing Now

Organizations that are taking the CRA seriously aren’t waiting for enforcement to begin. They’re treating compliance as a systems-building exercise, not a documentation exercise: building shared dependency graphs, aligning build and ship pipelines to security controls, and automating evidence collection.
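As a rough illustration of what automated evidence collection can look like, the Python sketch below ties a build artifact to its SBOM and to the outcome of pipeline checks in a single record. The `evidence_record` function, the field names, the artifact name, and the check names are assumptions made for this example. The CRA does not prescribe this format, and a real pipeline would read the actual artifact and SBOM files rather than the stand-in bytes used here.

```python
import hashlib
import json
from datetime import datetime, timezone


def evidence_record(artifact_name, artifact_bytes, sbom_bytes, checks):
    """Build one evidence entry tying an artifact to its SBOM and checks."""
    return {
        "artifact": artifact_name,
        # Digests let an auditor confirm which exact bytes were assessed.
        "artifact_sha256": hashlib.sha256(artifact_bytes).hexdigest(),
        "sbom_sha256": hashlib.sha256(sbom_bytes).hexdigest(),
        "checks": checks,
        "all_checks_passed": all(checks.values()),
        "recorded_at": datetime.now(timezone.utc).isoformat(),
    }


# Stand-in inputs; names and contents are hypothetical.
record = evidence_record(
    "billing-api-1.4.2.tar.gz",
    b"example artifact contents",
    b'{"bomFormat": "CycloneDX"}',
    {"sbom_generated": True, "dependency_scan": True, "signed": False},
)
print(json.dumps(record, indent=2))
```

Emitting a record like this on every build means the evidence already exists when someone asks for it, instead of being reconstructed after the fact.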

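The CRA’s reporting obligations begin to apply in September 2026, with the remaining requirements following in December 2027. Compliance won’t be optional, but it can be manageable if you build for it now.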

Companies at the forefront aren’t guessing at what the CRA might require. They’re engaging with the people helping shape the open standards and implementation guidance that regulators and auditors will look to first. Kusari is directly involved in the open source security bodies and projects building practical paths toward CRA alignment. We’re not reacting to the regulation—we’re working upstream of it.

If you sell software into the EU, now is the time to understand what “provable security” really means in practice. Come talk to us. We can help you translate CRA requirements into operational reality—before enforcement turns uncertainty into liability.

FAQs

Q: Why is the EU Cyber Resilience Act such a big deal for US companies?

A: Because it introduces mandatory, auditable requirements. If you sell software into Europe, you must prove secure-by-design practices and open source accountability—regardless of where your company is based.

Q: Aren’t SBOMs enough?

A: No. SBOMs are a starting point, but without dependency context and continuous validation, they don’t show real risk—especially transitive risk.
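To show what "dependency context" adds, here is a minimal Python sketch that walks an SBOM's dependency graph, separates direct from transitive dependencies, and records how each one entered the product. The fragment follows the CycloneDX `dependencies` layout (`ref` and `dependsOn`), but the component names and the `dependency_paths` helper are hypothetical.

```python
from collections import deque

# Hypothetical SBOM fragment using the CycloneDX "dependencies" layout,
# where each entry lists the direct dependencies of one component.
SBOM = {
    "dependencies": [
        {"ref": "app", "dependsOn": ["web-framework", "logger"]},
        {"ref": "web-framework", "dependsOn": ["http-parser"]},
        {"ref": "http-parser", "dependsOn": ["string-utils"]},
        {"ref": "logger", "dependsOn": []},
        {"ref": "string-utils", "dependsOn": []},
    ]
}


def dependency_paths(sbom, root):
    """Map each reachable component to its shortest path from root (BFS)."""
    graph = {d["ref"]: d.get("dependsOn", []) for d in sbom["dependencies"]}
    paths = {root: [root]}
    queue = deque([root])
    while queue:
        current = queue.popleft()
        for child in graph.get(current, []):
            if child not in paths:  # first visit is the shortest path
                paths[child] = paths[current] + [child]
                queue.append(child)
    return paths


paths = dependency_paths(SBOM, "app")
direct = {name for name, path in paths.items() if len(path) == 2}
transitive = {name for name, path in paths.items() if len(path) > 2}

print("direct:", sorted(direct))
print("transitive:", sorted(transitive))
print("how string-utils got in:", " -> ".join(paths["string-utils"]))
```

A flat component list would show `string-utils` as just another entry. The path shows that it arrived through `web-framework`, which tells you which upgrade or replacement actually removes it.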

Q: What’s the biggest mistake companies are making today?

A: Treating compliance as a documentation problem instead of a systems problem. Visibility and automation matter more than paperwork.

Q: How does open source factor into CRA compliance?

A: Open source is no longer “someone else’s responsibility.” If it’s in your product, you own the risk.

Q: Why are insurance companies relevant here?

A: Insurers are already refusing to underwrite AI-driven and software products without demonstrable security controls. They’re enforcing reality faster than regulators.

Q: What should companies do now?

A: Invest in:

- Dependency visibility (not just SBOMs)

- Standardized security baselines (like OSPS)

- Automation that scales across teams
