Going Beyond Vibes with Kusari Inspector

Your security reviews need to be based on facts, not vibes.

June 25, 2025

You’re probably familiar with the term “vibe coding”: producing software by describing its characteristics in natural language and letting a large language model (LLM) write the code. This can produce a functional, if not necessarily secure, application very quickly. For experienced developers, this quick assist can provide the scaffolding for a prototype or for a more robust and secure application. For inexperienced developers, vibe coding enables the creation of “toy” projects to solve personal needs or to guide learning.

“Vibe coding” implies the existence of “vibe reviewing”: tossing code at an LLM and letting it find the problems. This can certainly be helpful, especially on single-developer projects. Even if it doesn’t catch everything, an LLM that flags some of the issues is an improvement. Just as the best writers benefit from spellcheck, the best coders benefit from code analysis. Even developers who start with security in mind still make mistakes or overlook issues. The sheer number of dependencies and known issues makes it unreasonable to expect code scanning, secret detection, and dependency analysis to happen without help.

The challenge with reviews

Code reviews cannot be left to vibes alone. LLMs do not think or reason the way humans do. They can detect patterns and offer statistically likely solutions, but they do not understand the code. They produce both false negatives (missing real issues) and false positives (reporting issues that aren’t actually a problem). And, of course, they cannot be held accountable for their reviews.

Humans can be held accountable, but their reviews are imperfect, too. Tedious, repetitive tasks are particularly prone to mistakes: the brain goes on “autopilot” and the reviewer misses an issue. A reviewer with a heavy workload may not have time to research the web of dependencies for poorly maintained, out-of-date, or vulnerable libraries. And no human can keep everything in their head; on average, 100 new CVEs are published every day.

Humans with the right skills aren’t always available, either. In a solo-developer project or a small company, there might be literally no one who can provide an expert analysis of the security implications of a pull request. In that case, AI-powered reviews offer an always-available reviewer that gives immediate feedback. The challenge, then, is making sure those reviews are of high quality.

How Kusari Inspector is different

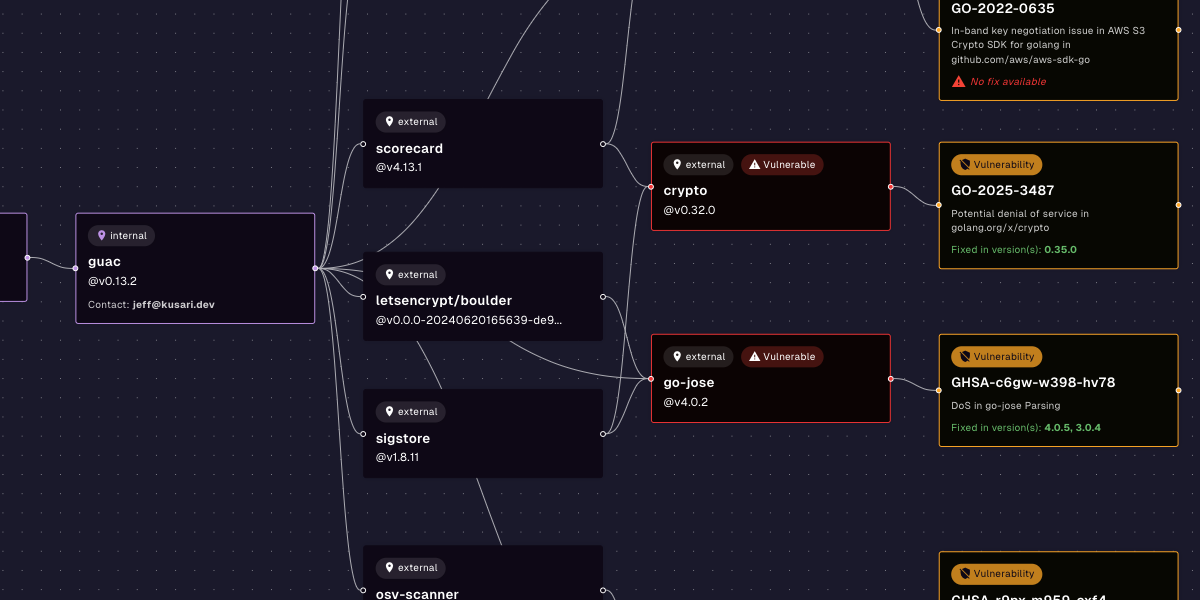

Unlike some other AI-powered review tools, Kusari Inspector does not rely on vibe reviewing. Those tools feed the code into an LLM and pass the model’s analysis directly to the developer. The “hallucination” problem in LLMs makes those reviews hard to trust. That’s why we took a different approach with Kusari Inspector: it orchestrates a suite of tools specifically designed for security analysis.

By starting with commonly used security analysis tools, Kusari Inspector bases its analysis on vetted, trustworthy applications. Running dependency analysis and code review at the same time gives you a more complete picture, with more context, in minutes. Kusari Inspector then analyzes the output of those tools to make a recommendation. It uses the code itself to put that output in context: for example, checking whether a SQL injection vulnerability is in a test library or in actively used code. This makes Kusari Inspector’s analysis reliable, as we have seen over the last few months of using it internally in our own product development.
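To make the idea of context-aware triage a little more concrete, here is a minimal, hypothetical sketch in Python. It is not Kusari Inspector’s implementation: the `Finding` record, the `is_test_path` heuristic, and the `TEST_DIR_NAMES` set are assumptions made purely for illustration. It only shows the general pattern of taking findings reported by security tools and using where they sit in the code to separate issues in test-only code from issues in code that actually ships.

```python
from dataclasses import dataclass
from pathlib import PurePosixPath

# Hypothetical finding record; real scanners each emit their own formats.
@dataclass
class Finding:
    tool: str   # which scanner reported the issue
    rule: str   # e.g. "sql-injection"
    path: str   # file the finding points at

# Assumed convention: directory names that mark test-only code.
TEST_DIR_NAMES = {"test", "tests", "__tests__", "testdata"}

def is_test_path(path: str) -> bool:
    """Heuristically decide whether a file path belongs to test code."""
    p = PurePosixPath(path)
    # Any parent directory named like a test directory marks the file as test code.
    if any(part in TEST_DIR_NAMES for part in p.parts[:-1]):
        return True
    # Common test file naming patterns (Python and Go conventions).
    name = p.name
    return name.startswith("test_") or name.endswith(("_test.py", "_test.go"))

def triage(findings):
    """Split findings into those in active code and those only in test code."""
    active, test_only = [], []
    for f in findings:
        (test_only if is_test_path(f.path) else active).append(f)
    return active, test_only

if __name__ == "__main__":
    findings = [
        Finding("scanner-a", "sql-injection", "app/db/queries.py"),
        Finding("scanner-a", "sql-injection", "tests/test_queries.py"),
    ]
    active, test_only = triage(findings)
    print("active:", [f.path for f in active])
    print("test only:", [f.path for f in test_only])
```

A path-based heuristic like this is deliberately simple. A real reviewer would also need to know, for instance, whether a “test” helper is imported by production code, which is the kind of code-level context described above.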

If you’re ready to analyze your pull requests based on facts, not vibes, install Kusari Inspector for free in just a few clicks.
