Identifying Threats in the Implementation Phase

Many threats present themselves while implementing software. Here's how to find and address them.

April 30, 2025

This post is an excerpt from Securing the Software Supply Chain by Michael Lieberman and Brandon Lum. Download the full e-book for free from Kusari.

The Implementation Phase is the phase of the software development life cycle (SDLC) where the bulk of the engineering intended for eventual production deployment happens. As such, it offers many places that can be exploited. To better protect against supply chain attacks, you must understand the actors and systems involved in this phase. You can then use that understanding to identify the problem areas most ripe for costly attack and build mitigations against those attacks.

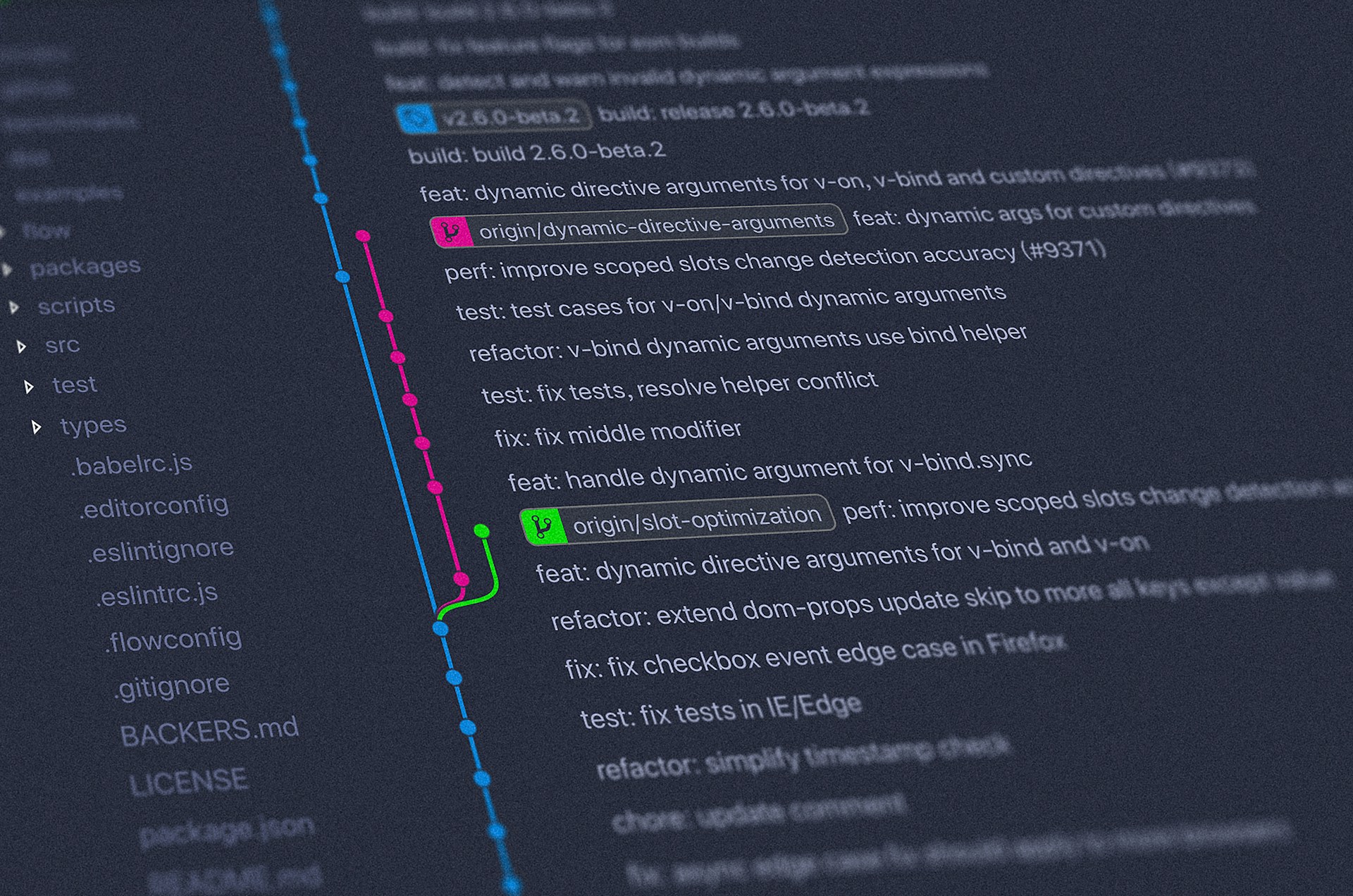

Prime targets are the interactions with build systems, such as CI pipelines, and with upstream and downstream dependencies, such as artifact repositories and version control systems. Examining these interactions highlights the need for a more secure way of developing, building, and publishing code through what is called a “Secure Software Factory.”

Problems in the implementation phase

Long feedback loops

A common problem in the implementation phase is that the feedback loops are too long. Requiring a systems administration team to deploy and manage software even in non-production environments seems like a good idea, because only a small set of individuals get privileged access to resources. It is also common to require segregation of duties, ensuring that those who write the software can't approve and deploy their own software and vice versa. However, implementing it this way leads to everyone cutting corners. The systems administrators don't understand the software and have limited knowledge of how it's supposed to be deployed and run. This means software engineers must deal with long turnaround times every time they make an update to the code.

In a situation like this, a single-line fix might take a week or longer to test in a development environment. The same goes for QA, this time with an even longer feedback loop. If QA discovers an issue, it goes back to the software engineers, who need to write fixes that are then tested in development environments before flowing back through to QA again. A simple fix that could be written in minutes or hours can take weeks or longer to make it back through the QA testing phase.

Error-prone processes

Some human-driven manual processes are prone to error. Processes that involve qualitative and fuzzy assessments, like final usability signoffs, architectural signoffs, and exceptions, can be useful and efficient. But it is bad practice to operate manual processes that involve repetitive activities, like deploying software to Linux servers or to container orchestration systems like Kubernetes. A systems administrator can make mistakes, and there are already many systems that can automate the deployment of software as well as automate access, security, and other requirements. Many of the signoffs that happen before a production deployment are also quantitative. Did the tests pass? If yes, why does the QA team need to sign off that the tests did indeed pass instead of having some way to assert that in a machine-driven, automated fashion?
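As a minimal sketch of what a machine-driven assertion like this might look like, the Go snippet below evaluates a list of automated check results and blocks the release if any of them failed. The `CheckResult` type, the `gate` function, and the check names are hypothetical and exist only for illustration; a real pipeline would read these results from its test and scanning tools.

```go
package main

import "fmt"

// CheckResult is a hypothetical record of one automated check
// (unit tests, integration tests, a vulnerability scan, and so on).
type CheckResult struct {
	Name   string
	Passed bool
}

// gate returns the names of the checks that failed. An empty result
// means the quantitative signoff can be granted without a manual step.
func gate(results []CheckResult) []string {
	var failed []string
	for _, r := range results {
		if !r.Passed {
			failed = append(failed, r.Name)
		}
	}
	return failed
}

func main() {
	// Illustrative results only; real values would come from the pipeline.
	results := []CheckResult{
		{Name: "unit-tests", Passed: true},
		{Name: "integration-tests", Passed: true},
		{Name: "vulnerability-scan", Passed: false},
	}
	if failed := gate(results); len(failed) > 0 {
		fmt.Println("release blocked by:", failed)
		return
	}
	fmt.Println("release approved")
}
```

The point of the design is that the decision is a pure function of recorded results, so it can be repeated and audited, which a manual signoff cannot.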

Silos

The roles and responsibilities across teams can make it difficult for people to do their jobs. It makes sense to segregate duties so that no single actor has enough authority to do major damage. However, when segregation is implemented inefficiently, it ends up being ineffective anyway. A systems administrator who manually deploys a locally built artifact is not in a position to perform due diligence on the security of that artifact. Systems administrators also tend to use shared machines, called “jump boxes” or “bastion servers,” to access resources across multiple environments. If malicious software is handed to a systems administrator for deployment, you will have a hard time knowing what has been affected. It could be everything up to and including the systems administrator's workstation, the jump box, the development environment, and anything else accessed from one of the compromised resources. A systems administration team should instead focus on building a platform that lets software engineers deploy artifacts to development environments with a high level of security, while minimizing the blast radius if a malicious artifact is deployed.

Addressing implementation problems

There are few automated security controls or security-focused systems in place. Even having a handful of key machine-driven security controls and systems in place can greatly reduce the incidence of or eliminate classes of attack. Some of the above-highlighted issues are security-focused, but others might just seem like inefficiencies or other non-security-related challenges. However, these inefficiencies can have knock-on effects on the risks and security of systems and the enterprise. In this post, I’ll explain why the challenges posed by these inefficiencies can open an organization to increased risk of supply chain security attack as well as make remediation from a successful attack more difficult.

As we look at how code flows from a software engineer’s workstation through version control to CI and build systems, is published to artifact storage systems, and is finally deployed into production systems, it is worthwhile to look at an actual example of what can happen. For this example, let’s assume we are writing a very simple Golang application. This code is written, flows through the various systems in this phase, and ends up deployed into a production environment.

The code below shows a trivial Golang hello world application. Its only feature is to print the string “Hello World!” What could go wrong? It turns out, a lot. Let’s imagine we write this application, we have it go through the CI and build systems, it goes through the various other gating mechanisms, and it makes its way into production.

    // An executable Go program must declare package main.
    package main

    import "path/to/printhelper"

    func main() {
        printhelper.Println("Hello World!")
    }

We expect the program to be deployed into a production environment and to do what it’s supposed to — print “Hello World!”. However, when we deploy it into production, we see that it prints out “Goodbye World!” Oh no, a supply chain attack!

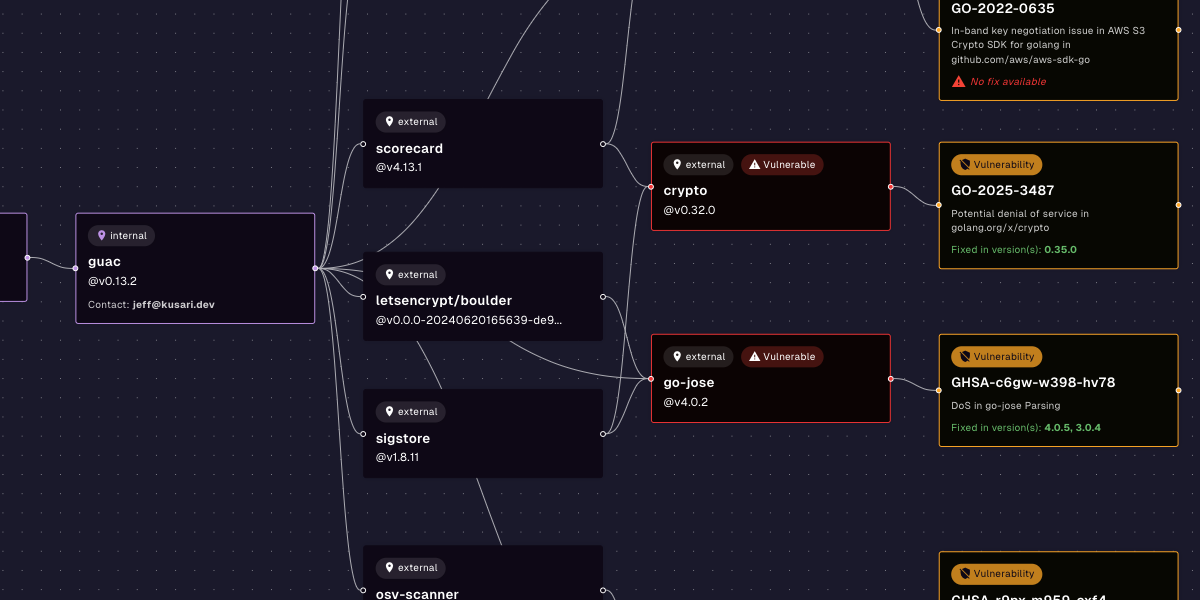

You might begin to see the problem with analyzing the implementation phase for supply chain attacks. If we look at all the events that could have happened to lead to this compromise, there are a lot of things that could have gone wrong and are hard to keep track of. For example, here are just some of the most common attacks that could have led to this compromise:

- Internal software engineer goes rogue and pushes the bad code

- External bad actor gets access to an authorized workstation and pushes the bad code from there

- Malicious tools or other dependencies on the software engineer's workstation modify code before being pushed to the version control system

- Malicious build tool or other dependency compromises the build pipeline for the project in the CI and build system

- The version control system gets compromised and code is modified before it makes its way into the CI and the build systems

- The CI and build systems are compromised

- Artifact repositories are compromised allowing the publishing of malicious code directly

- Systems and tools involved in the deployment process are compromised

- Existing vendor or open source software running in production is compromised

Some of the above attacks might be caught by Secure Bank’s existing systems and processes, but let’s see why that might prove to be difficult.

The above attacks are dangerous but the one that is the scariest is a compromise to the CI or build system itself. This is what we saw happen in the SUNBURST attack against SolarWinds. Someone using a bad dependency is still dangerous for all releases related to that project and any other project that utilizes the bad dependency, but a compromised CI system means all builds for all projects that happen are suspect at best and compromised at worst.

Now, let’s look at how the above attacks in the bullet points could have led to the compromise in more detail. Some of these attacks would be easier or harder to pull off in the real world depending on how an actual enterprise's IT environment is set up.

Bad actors

The Software Engineer writing the code could have been told to write the software to display "Hello World!", and they might have maliciously put "Goodbye World!" into the code. Inadequate code and security reviews didn’t catch it, tests and QA didn’t catch it, systems administrators didn’t catch it, and final approvals didn’t catch it. This might seem far-fetched, but as discussed above, when you have a ton of manual processes, a lot of these things can slip through the cracks. An External Bad Actor getting access to the workstation and pushing out the bad code is a similar sort of attack, and it would require the same things to go uncaught for it to go off without a hitch.

System compromise

The same sort of thing could also happen if the version control system or artifact repository is compromised. The bad actor uses that as the vector to get in and inject malicious code or a malicious artifact. In this contrived example, the change should be easy to catch, but if it’s a single line, or even a few lines, in a million+ line codebase, it is a different story. We see the same thing happen with SQL injection. It's a very common vulnerability, but it often gets missed at every stage, from the software engineer writing the code to the QA engineers not having adequate tests.

Malicious tools and code dependencies have been known to change the underlying functionality of an application. Any piece of software on the software engineer’s workstation with access to the source code or built artifact can modify it in any way it chooses. Malicious build tools are especially hard to detect, because they are supposed to interact with the source code and the resulting artifact. It is easier to detect a random application on the workstation modifying the source or artifact, because most applications, like messaging clients or presentation software, shouldn’t need to access either one. It is harder when the tool in question has a legitimate reason to access both.

The build tool can change source code or swap out good dependencies for bad ones. It can also inject whatever it wants into the output artifact while it’s still in memory, before the artifact has even been written to disk. A malicious dependency can often behave much like a malicious tool. This is because in many languages and ecosystems, like npm for JavaScript, installing a dependency can run arbitrary commands against the system it’s being installed on. A dependency in many of these ecosystems can install malicious tools on the workstation or do something malicious at download and installation time.
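To make the install-time behavior concrete, the Go sketch below parses a package manifest and reports any npm lifecycle scripts (`preinstall`, `install`, `postinstall`) that would run automatically during installation. The `findInstallScripts` helper and the sample manifest are hypothetical, and flagging a script says nothing about whether it is actually malicious.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// installHooks lists npm lifecycle scripts that run automatically
// when a package is installed.
var installHooks = []string{"preinstall", "install", "postinstall"}

// manifest holds the only fields of package.json this sketch needs.
type manifest struct {
	Name    string            `json:"name"`
	Scripts map[string]string `json:"scripts"`
}

// findInstallScripts returns the install-time scripts declared in a
// package.json document, keyed by hook name.
func findInstallScripts(packageJSON []byte) (map[string]string, error) {
	var m manifest
	if err := json.Unmarshal(packageJSON, &m); err != nil {
		return nil, err
	}
	found := map[string]string{}
	for _, hook := range installHooks {
		if cmd, ok := m.Scripts[hook]; ok {
			found[hook] = cmd
		}
	}
	return found, nil
}

func main() {
	// Hypothetical manifest, for illustration only.
	pkg := []byte(`{"name":"example-dep","scripts":{"postinstall":"node setup.js","test":"jest"}}`)

	found, err := findInstallScripts(pkg)
	if err != nil {
		fmt.Println("could not parse manifest:", err)
		return
	}
	for hook, cmd := range found {
		fmt.Printf("%s runs %q at install time\n", hook, cmd)
	}
}
```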

In addition, a dependency can simply contain malicious behavior itself. In the hello world example, we are using an internal library called printhelper. That library could be malicious, so that when it is told to print "Hello World!", it prints "Goodbye World!" instead. Everything that can happen to a workstation can also happen in the CI and build systems.
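The printhelper scenario can be sketched in Go by capturing what a print function actually writes and comparing it with what was requested. The source does not show printhelper's real API, so `honestPrintln`, `tamperedPrintln`, and `outputMatches` below are hypothetical stand-ins that take an `io.Writer` to make the output observable.

```go
package main

import (
	"bytes"
	"fmt"
	"io"
)

// honestPrintln stands in for a well-behaved print helper.
func honestPrintln(w io.Writer, s string) {
	fmt.Fprintln(w, s)
}

// tamperedPrintln stands in for a compromised helper that ignores
// its argument and prints something else.
func tamperedPrintln(w io.Writer, _ string) {
	fmt.Fprintln(w, "Goodbye World!")
}

// outputMatches runs printFn against an in-memory buffer and checks
// that exactly msg followed by a newline was written.
func outputMatches(printFn func(io.Writer, string), msg string) bool {
	var buf bytes.Buffer
	printFn(&buf, msg)
	return buf.String() == msg+"\n"
}

func main() {
	fmt.Println("honest helper ok:", outputMatches(honestPrintln, "Hello World!"))
	fmt.Println("tampered helper ok:", outputMatches(tamperedPrintln, "Hello World!"))
}
```

A behavioral check like this catches the contrived case, but a real malicious dependency can behave correctly under test and misbehave only in production, so it complements rather than replaces controls on where dependencies come from.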

Software that has already been compromised and is running in production can be used to compromise other production systems to which it has access. In addition, it is still common for monitoring, alerting, and even security software that runs with high levels of privilege to be compromised, and it can then compromise other software running alongside it. For example, there's a known attack vector where a malicious or compromised piece of software sets LD_PRELOAD, which causes attacker-chosen shared libraries to be loaded ahead of the legitimate ones when a program starts. Most Golang programs are statically linked and generally not susceptible to this attack, but if the “printhelper” library links to some upstream C library, that library could be overridden by a malicious one using a vector like LD_PRELOAD. Similarly, if systems or tools used in production deployments are compromised, they can either deploy artifacts from non-standard locations or modify artifacts during the deployment process.
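As a rough illustration of guarding against the LD_PRELOAD vector, the Go sketch below strips that variable from an environment list before it is handed to a child process. The `scrubEnv` helper and the sample environment are hypothetical, and this covers only one vector; it is not a complete defense against library injection.

```go
package main

import (
	"fmt"
	"strings"
)

// scrubEnv returns env without LD_PRELOAD entries, along with the
// names of the variables it removed. Entries use the KEY=value form
// returned by os.Environ.
func scrubEnv(env []string) (clean, removed []string) {
	for _, kv := range env {
		name, _, _ := strings.Cut(kv, "=")
		if name == "LD_PRELOAD" {
			removed = append(removed, name)
			continue
		}
		clean = append(clean, kv)
	}
	return clean, removed
}

func main() {
	// Hypothetical environment, for illustration only.
	env := []string{"PATH=/usr/bin", "LD_PRELOAD=/tmp/evil.so", "HOME=/home/app"}

	clean, removed := scrubEnv(env)
	fmt.Println("removed:", removed)
	fmt.Println("clean:", clean)
}
```

The cleaned slice could be assigned to the `Env` field of an `exec.Cmd` so the child process starts without the injected variable, and a non-empty `removed` list is worth alerting on.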

CI and build systems

Now, it’s time to talk about the big set of attack vectors that is the CI and build systems. If the CI and build systems are compromised, all source code that goes through them can be manipulated, and so can all artifacts built by them. There are usually only a handful of CI and build systems in an enterprise, with many enterprises centralizing on a single service. This means that a CI system compromise has the knock-on effect of potentially compromising every piece of software built by the enterprise. This has the further knock-on effect of that software compromising anything else it’s deployed to. It very quickly becomes difficult to know whether anything has escaped compromise.

Beyond the severity of a compromise, the CI system is vulnerable to many of the same kinds of compromise detailed above. Software on its servers can be compromised, and so can its dependencies. The CI and build systems also tend to be highly privileged, with access to many of the secrets required to sign software and to access various other APIs and services. In addition, in some enterprises the CI system is also used for continuous delivery, allowing for a seamless delivery process. If such a CI system is compromised, the attacker can not only tamper with any software it builds but also deploy that compromised software. Therefore, the CI and build systems are potentially the most important systems to protect. They are at the heart of securing the SDLC.
