AI and the Secure Software Factory

Artificial intelligence can help secure the software supply chain, but it also brings additional considerations.

June 4, 2025

This post is an excerpt from Securing the Software Supply Chain by Michael Lieberman and Brandon Lum. Download the full e-book for free from Kusari.

As artificial intelligence continues to evolve and mature, it presents both opportunities and challenges for secure software development. Let’s explore how AI could be integrated into the secure software factory, as well as how the principles of the secure software factory could be applied to AI development itself. A word of caution, though: the AI space is still quite immature. Techniques for applying AI to software supply chain security use cases, and patterns for securing the AI supply chain itself, are both evolving, but as of this writing they aren’t mature and tooling support is sparse.

Let’s start with how AI could help:

- Enhanced Code Analysis: AI models could be trained to identify potential security vulnerabilities in code more effectively than traditional static analysis tools. These AI-powered tools could potentially catch subtle patterns that human reviewers or rule-based systems might miss. However, some recent studies show that even the same model doesn’t find a given vulnerability every time it’s prompted. We expect this to improve as AI models get better.

- Anomaly Detection: Machine learning algorithms could be employed to detect unusual patterns in build processes, potentially identifying supply chain attacks or compromised systems more quickly than traditional monitoring methods.

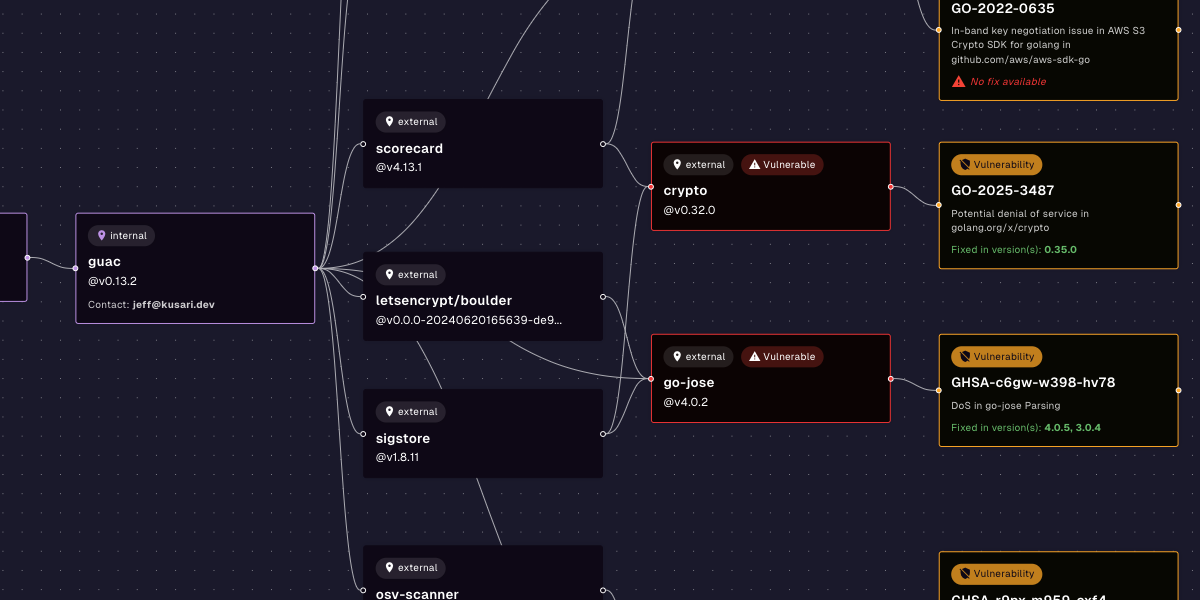

- Automated Dependency Management: AI systems could assist in evaluating and selecting dependencies, potentially identifying safer alternatives or flagging dependencies with suspicious behavior or known vulnerabilities.

- Intelligent Access Control: AI could be used to enhance access control systems, analyzing patterns of behavior to identify potential insider threats or compromised credentials.

- Predictive Maintenance: Machine learning models could predict potential system failures or security risks before they occur, allowing for proactive maintenance and security measures.

Secure Bank would most likely look at anomaly detection as its first step. AI is well-suited for this because anomaly detection is essentially a search for statistical outliers in data, and machine learning is built largely on statistics. Secure Bank could then use this anomaly detection to support other use cases like automated dependency management and code analysis. The bank would be cautious and avoid letting the AI decide whether a build passes or fails based solely on its own analysis. AI, especially large language models (LLMs), can give differing outputs for the same input because of the randomness in how LLMs generate their output. This randomness helps make AI creative, but creates issues for security use cases where determinism is important.
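To make the idea of anomaly detection as a search for statistical outliers concrete, here is a minimal sketch that flags unusual build durations. It is only an illustration, not a description of any particular tool: the function names, the sample build IDs and durations, and the 3.5 threshold are all assumptions, and the technique shown (a modified z-score based on the median) is a simple statistical stand-in for a trained model. In keeping with the caution above, it returns builds to review rather than failing anything on its own.

```python
from statistics import median


def robust_z_scores(values):
    """Return a modified z-score for each value, using the median and the
    median absolute deviation (MAD) so one extreme value cannot skew the scale."""
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        # MAD is zero when more than half the values are identical;
        # this sketch then reports no outliers rather than dividing by zero.
        return [0.0 for _ in values]
    # 0.6745 rescales the MAD so scores are comparable to ordinary z-scores.
    return [0.6745 * (v - med) / mad for v in values]


def flag_anomalous_builds(durations, threshold=3.5):
    """Return the IDs of builds whose duration is a statistical outlier.

    `durations` maps a (hypothetical) build ID to its duration in seconds.
    The result is a list for human review, not a pass/fail decision.
    """
    scores = robust_z_scores(list(durations.values()))
    return [
        build_id
        for build_id, score in zip(durations, scores)
        if abs(score) > threshold
    ]


# Hypothetical build durations in seconds; build-105 took far longer than usual.
sample_durations = {
    "build-101": 312,
    "build-102": 305,
    "build-103": 298,
    "build-104": 320,
    "build-105": 1190,
    "build-106": 309,
}

print(flag_anomalous_builds(sample_durations))  # ['build-105']
```

Because this check is deterministic, the same data always produces the same flags, which is the property the bank would want before letting any signal influence a build.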

Now let’s look at how to secure the AI models that Secure Bank uses to secure the software factory (or for any other purpose, such as detecting anomalous and potentially fraudulent transactions). The bank can do this by applying the secure software factory systems and practices to the AI it trains.

Here are some practices:

- Versioning and Provenance: Just as with traditional software, AI models and training data should be version-controlled, with clear provenance information maintained throughout the lifecycle. When the bank trains its AI, it can generate SLSA provenance and record dependencies such as the training set in SBOMs. SBOMs used for this purpose are often referred to as AI BOMs.

- Reproducible Builds: The process of training AI models should be made reproducible, allowing for verification of the model's integrity and behavior. This is often difficult given the nature of AI training, but techniques are evolving to make the training process more deterministic.

- Secure Data Handling: Training data, which can be sensitive, should be protected using the same rigorous security measures applied to other critical assets in the secure software factory. The bank would want to ensure that the training data is not tampered with at any point during the training process. Tampering with training data is an increasingly common attack vector.

- Model Validation: Rigorous testing and validation processes should be implemented to ensure AI models behave as expected and don't introduce new vulnerabilities or biases. These processes should also run through the secure software factory and produce signed attestations of which tests were performed, so the bank can verify that its AI models have been adequately tested.
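Several of the practices above come down to recording cryptographic digests of what went into a model and then signing a statement about it. The following is a rough sketch of that idea using only the Python standard library. The record layout and all function names are hypothetical: this is not the SLSA provenance schema or any SBOM/AI BOM format, and the HMAC with a shared key is a simplified stand-in for whatever signing mechanism a real secure software factory would use.

```python
import hashlib
import hmac
import json


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of some bytes as a hex string."""
    return hashlib.sha256(data).hexdigest()


def build_record(model_bytes, training_sets, tests_passed):
    """Build a simple, hypothetical provenance-style record for a trained model.

    `training_sets` maps a dataset name to its raw bytes;
    `tests_passed` lists the names of validation tests that were run.
    """
    return {
        "model_sha256": sha256_hex(model_bytes),
        "training_data": {
            name: sha256_hex(blob) for name, blob in sorted(training_sets.items())
        },
        "tests_passed": sorted(tests_passed),
    }


def sign_record(record, key: bytes) -> str:
    """Sign a canonical JSON encoding of the record.

    Assumption: HMAC-SHA256 with a shared key stands in for a real signature.
    """
    payload = json.dumps(record, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify_record(record, signature: str, key: bytes) -> bool:
    """Check that the record still matches the signature it was issued with."""
    return hmac.compare_digest(sign_record(record, key), signature)


def training_data_unchanged(record, name: str, blob: bytes) -> bool:
    """Check that a dataset still matches the digest recorded at training time."""
    return record["training_data"].get(name) == sha256_hex(blob)
```

A short usage example, with made-up file names and contents:

```python
key = b"demo-key-not-for-production"
dataset = b"account,amount\n1001,25.00\n"

record = build_record(b"model-weights", {"transactions.csv": dataset}, ["bias-check"])
signature = sign_record(record, key)

print(verify_record(record, signature, key))                             # True
print(training_data_unchanged(record, "transactions.csv", dataset))      # True
print(training_data_unchanged(record, "transactions.csv", b"tampered"))  # False
```

Comparing `model_sha256` across two training runs from the same inputs is also one simple way to check whether training was reproducible, although byte-identical models are often hard to achieve in practice.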

AI has the potential to be hugely impactful as a tool in the development of secure software, but it needs to be used with caution. Since the secure software factory is one of the most critical systems for supply chain security, you should ensure that any AI used in it goes through the same rigorous practices.
