2026 Predictions: Open Source Accountability, AI Security, and Standardization Will Define the Next Era of Software

For years, software security has lived in the realm of best practices. In 2026, it stops being theoretical and becomes a firm requirement.

January 20, 2026

Organizations have talked about securing open source, managing AI risk, and “shifting left.” But this year marks a turning point: regulation, insurance pressure, and software complexity are converging to force real accountability.

Based on what we’re seeing at Kusari, from customer concerns about the EU Cyber Resilience Act (CRA) to our work helping define the Open Source Project Security (OSPS) Baseline, three shifts will define the year ahead.

1. The Free Ride on Open Source Is Officially Over

The CRA fundamentally changes the rules for any company selling software into Europe.

Unlike US regulations, which often rely on reasonableness and intent, the CRA introduces explicit, enforceable requirements. Just as the General Data Protection Regulation (GDPR) changed how companies handle user data, the CRA marks a major shift in how they manage their software supply chain. Its requirements are written to ensure that products sold in the EU are secure both now and in the future. In short:

- You must know what’s in your software

- You must manage third-party risk

- You are accountable for vulnerabilities—even in open source you didn’t write

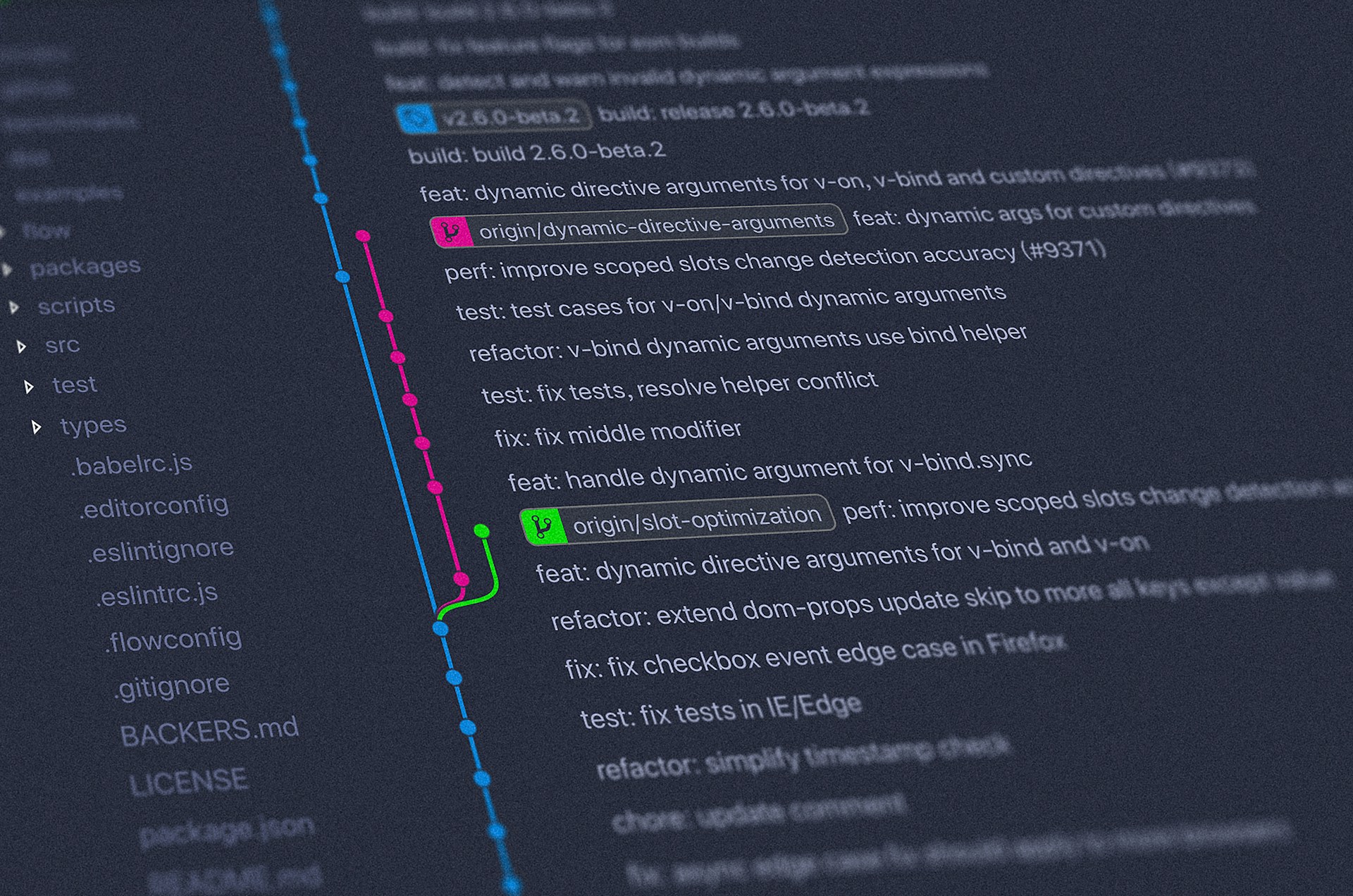

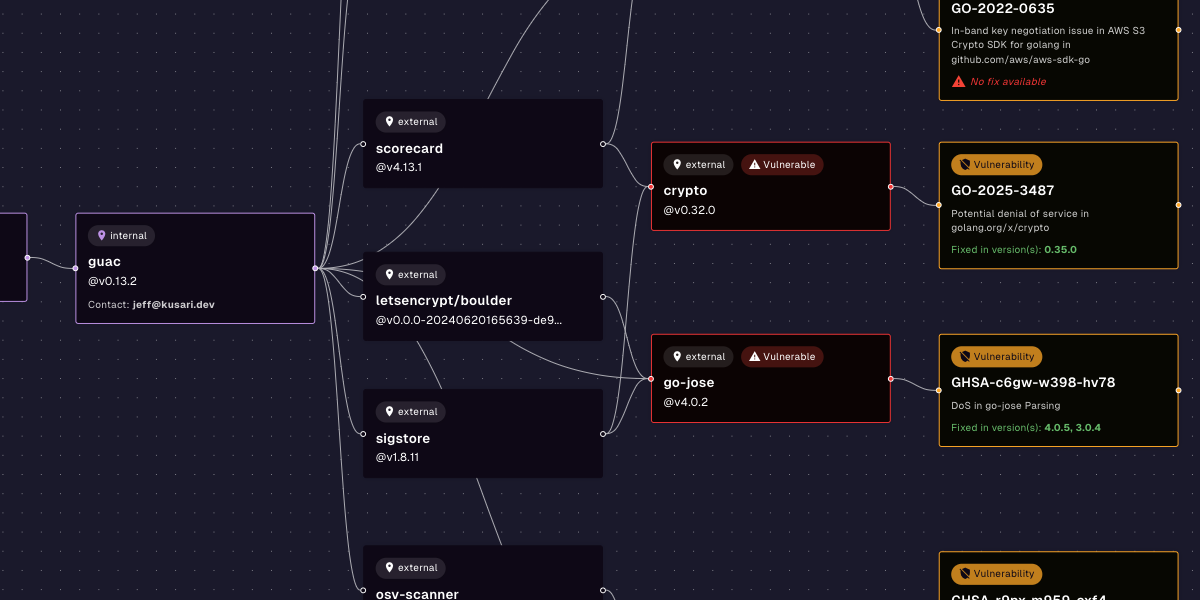

This last point is key. Companies can’t build their empire on top of open source software and then point fingers at underfunded maintainers when a vulnerability surfaces. It’s now their responsibility to vet and secure the code they bring into their products, and that takes more than a software bill of materials (SBOM). An SBOM alone doesn’t tell you how components relate, which dependencies are reachable, or which risks are inherited transitively. Visibility without context is no longer sufficient.
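To make “inherited transitively” concrete, here is a minimal sketch, assuming a small, entirely hypothetical dependency graph (all package names are invented). Given a vulnerable component, it lists every path by which the product pulls it in. This only covers dependency-graph reachability (is the package in the build, and through whom?), not code-level reachability (is the vulnerable function ever called?), which needs call-graph analysis.

```python
from collections import deque

# Hypothetical dependency graph: each package maps to the packages it depends on.
# These edges are the relationship context that a flat component list may not capture.
DEPENDENCIES: dict[str, list[str]] = {
    "my-app": ["web-framework", "report-lib"],
    "web-framework": ["http-parser", "template-engine"],
    "report-lib": ["pdf-writer"],
    "template-engine": ["string-utils"],
    "pdf-writer": ["string-utils"],
    "http-parser": [],
    "string-utils": [],
}


def dependency_paths(graph: dict[str, list[str]], root: str, target: str) -> list[list[str]]:
    """Return every acyclic path from root to target (breadth-first)."""
    paths: list[list[str]] = []
    queue = deque([[root]])
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == target:
            paths.append(path)
            continue
        for dep in graph.get(node, []):
            if dep not in path:  # skip cycles
                queue.append(path + [dep])
    return paths


if __name__ == "__main__":
    # Suppose a (hypothetical) advisory flags "string-utils".
    for p in dependency_paths(DEPENDENCIES, "my-app", "string-utils"):
        print(" -> ".join(p))
```

Run against this graph, the sketch shows that the flagged package arrives through two unrelated direct dependencies. A fix, or an accountability question for a maintainer, may therefore involve both of them.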

2. Security Standards Will Become an Enabler—Not a Blocker

One uncomfortable truth of CRA compliance is that organizational chaos is expensive.

When every team builds software differently, compliance becomes nearly impossible. That’s why frameworks like the OSPS Baseline matter: they provide a shared, minimal bar for secure development.

We’re already seeing customers use OSPS-aligned controls to:

- Reduce variance across teams

- Automate security checks earlier

- Respond faster when issues arise

Standardization isn’t about slowing developers down. Done correctly, it removes friction and accelerates response, turning security into a delivery advantage rather than a bottleneck. Practical security requirements that everyone understands, at every stage of the delivery process, reduce rework and speed up delivery. Just a few basic controls can eliminate entire classes of security issues. For example, requiring multi-factor authentication for everyone with write access to a repository closes off account takeovers that rely on a stolen password alone.
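The idea of a shared minimal bar, checked automatically and identically across teams, can be sketched in a few lines. This assumes a hypothetical `RepoSettings` record and three illustrative checks. It is not the official OSPS Baseline control list, and the settings would in practice come from your source-control platform.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class RepoSettings:
    # Hypothetical settings snapshot for one repository.
    name: str
    mfa_required: bool
    default_branch_protected: bool
    has_security_policy: bool


# Illustrative baseline: every repository, on every team, is held to the same checks.
BASELINE: dict[str, Callable[[RepoSettings], bool]] = {
    "mfa-required": lambda r: r.mfa_required,
    "branch-protection": lambda r: r.default_branch_protected,
    "security-policy": lambda r: r.has_security_policy,
}


def failed_checks(repo: RepoSettings) -> list[str]:
    """Return the names of the baseline checks this repository fails."""
    return [name for name, passes in BASELINE.items() if not passes(repo)]


if __name__ == "__main__":
    repos = [
        RepoSettings("payments-api", True, True, True),
        RepoSettings("internal-tool", False, True, False),
    ]
    for repo in repos:
        failures = failed_checks(repo)
        print(repo.name, "OK" if not failures else f"FAILS: {', '.join(failures)}")
```

Because the checks are data rather than per-team habits, the same list can run in CI for every repository. This is one way to reduce variance and catch gaps earlier.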

3. AI Security Grows Up

Large language models (LLMs) have been treated as something magical that requires an entirely new security approach. In reality, they’re just software, and there’s no need to bypass or reinvent organizational policies for them. In 2026, AI security will be handled much like security for any other software component, with LLMs treated as virtual engineers operating within established environments. LLMs will no longer skip organizational security policy; they’ll face the same constraints as any other actor:

- Least-privilege access

- Clear guardrails

- Reviews, approvals, and accountability
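Treating an LLM as “just another actor” can be sketched as a single authorization gate that every request passes through, human or AI. Everything here is hypothetical: the actor names, action names, grants, and the rule that merges need a different human’s approval are illustrative assumptions, not any specific product’s policy model.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ActionRequest:
    actor: str                         # a human engineer or an LLM agent
    action: str                        # e.g. "read_repo", "open_pull_request", "merge"
    approved_by: Optional[str] = None  # human reviewer who signed off, if any


# Least privilege (hypothetical grants): each actor gets only what its job needs.
GRANTS: dict[str, set[str]] = {
    "llm-agent": {"read_repo", "open_pull_request"},
    "alice": {"read_repo", "open_pull_request", "merge"},
    "bob": {"read_repo", "open_pull_request", "merge"},
}
HUMAN_REVIEWERS = {"alice", "bob"}
REQUIRES_REVIEW = {"merge"}  # guardrail: these actions need a different human approver

# Accountability: every decision is recorded as (actor, action, allowed, reason).
AUDIT_LOG: list[tuple[str, str, bool, str]] = []


def authorize(req: ActionRequest) -> bool:
    """Apply the same checks to every actor and record the decision."""
    if req.action not in GRANTS.get(req.actor, set()):
        allowed, reason = False, "action not granted to this actor"
    elif req.action in REQUIRES_REVIEW and (
        req.approved_by not in HUMAN_REVIEWERS or req.approved_by == req.actor
    ):
        allowed, reason = False, "needs approval from a different human reviewer"
    else:
        allowed, reason = True, "allowed"
    AUDIT_LOG.append((req.actor, req.action, allowed, reason))
    return allowed


if __name__ == "__main__":
    print(authorize(ActionRequest("llm-agent", "open_pull_request")))        # True
    print(authorize(ActionRequest("llm-agent", "merge")))                    # False: not granted
    print(authorize(ActionRequest("alice", "merge", approved_by="alice")))   # False: self-approval
    print(authorize(ActionRequest("alice", "merge", approved_by="bob")))     # True
```

The design choice is that the agent has no special path. It can propose changes, but a merge still needs a human reviewer, and the audit log captures every decision for later review.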

We’re already seeing this shift driven by an unexpected force: insurance providers. Many are refusing to underwrite AI-driven products without demonstrable security practices in place. The unbounded risk of unreviewed code from unaccountable models gives the insurance sector nightmares. By contrast, LLM usage that stays within policy and security boundaries, helping accountable engineers deliver value faster and more securely, will be widely embraced.

Tools like Kusari reflect this reality by embedding organizational policy and security constraints directly into how AI evaluates risk, so that insights are actionable, auditable, and aligned with how teams are already expected to work. AI can help analyze security risk, but only when grounded in:

- Trusted facts

- Business-informed policy

- Domain-specific context

The future isn’t one giant AI model. It’s specialized agents, orchestrated together, mirroring how real security organizations operate.

What This Means for 2026

If there’s one takeaway for the year ahead, it’s this:

Companies must hold themselves accountable for the risk in open source and AI-produced code, or regulators will do it for them.

Regulation, AI, and open source accountability are converging—and organizations that invest in visibility, standards, and automation today will move faster tomorrow.

At Kusari, we’re building that future—where security enables innovation instead of blocking it.
